Well, as I promised the last time (a long, long time ago), let’s have a look at GCP’s external load balancer now. While it shares some features with the internal load balancer, it has something unique as well:

- ELB is meant to be accessed from outside, and “outside” is kind of global, so ELB tends to use global as well as regional building blocks.

- It knows about HTTP(S) and can use that knowledge to route traffic to more than one backend service, using the URL as a map.

- It also acts as a proxy, so if, e.g., an SSL ELB is used, it terminates the SSL session well before traffic reaches the actual instances.

At the time of writing, GCP supports four breeds of ELB: HTTP, HTTPS, SSL Proxy and TCP Proxy. The most complex of them seems to be HTTPS, so for today’s dissecting session let’s pick that one over the others.

What HTTPS ELB is made of

If you recall, the internal load balancer looked like this:

Structurally, the HTTPS external load balancer looks pretty much like the internal one, but with a few extra components in the middle (the target HTTPS proxy, the SSL certificate and the URL map):

- Global forwarding rule

- Target HTTPS proxy

- SSL Certificate

- URL Map

- Backend service with a health check

- Regional managed instance group and an instance template.

Something like this:

In a common scenario we might also need a couple of helper resources. The first one is a firewall rule that lets health check traffic in. The other one is an external IP address, which isn’t strictly required, since Google will auto-assign one otherwise.

There’s one more interesting fact about the external load balancer. Even though it may serve HTTPS traffic at its public end, the traffic behind it can remain unencrypted. Such a configuration sounds cool enough, so this is exactly what we’re going to build today.

As usual, let’s review the building blocks one by one, starting from the bottom – a managed instance group.

Managed instance group and an instance template

```yaml
resources:
- name: http-instance-group-manager
  type: compute.v1.regionInstanceGroupManager
  properties:
    instanceTemplate: $(ref.http-instance-template.selfLink)
    namedPorts:
    - name: http
      port: 80
    targetSize: 2
    region: us-east1
- name: http-instance-template
  type: compute.v1.instanceTemplate
  properties:
    properties:
      disks:
      - autoDelete: true
        boot: true
        deviceName: boot
        initializeParams:
          sourceImage: projects/ubuntu-os-cloud/global/images/family/ubuntu-1804-lts
        type: PERSISTENT
      machineType: f1-micro
      metadata:
        items:
        - key: startup-script
          value: |
            sudo apt-get update
            sudo apt-get install apache2 -y
            sudo service apache2 restart
            echo "host:`hostname`" | sudo tee /var/www/html/index.html
      networkInterfaces:
      - accessConfigs:
        - name: External NAT
          type: ONE_TO_ONE_NAT
        network: global/networks/default
```

There’s nothing unusual about the instance template: it’s a regular Ubuntu instance with the Apache web server on it. Though the instance template declares an external IP address, strictly speaking it’s not required for external load balancing. However, unless we set up some sort of NAT gateway, an external IP address is required to reach the internet, and our apt-get install commands definitely need that.
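If you’d rather drop the external IP from the template, the “NAT gateway” could be Cloud NAT, which is configured on a Cloud Router. This is a minimal sketch, not something from the original setup: it assumes the default network and the us-east1 region used by the MIG, the names nat-router and nat-config are hypothetical, and I haven’t deployed this exact configuration. With it in place, the accessConfigs section of the instance template could go away.

```yaml
# Hypothetical Cloud NAT setup: instances without external IPs can still reach apt mirrors
- name: nat-router
  type: compute.v1.router
  properties:
    region: us-east1                  # assumed: same region as the regional MIG
    network: global/networks/default  # assumed: the default network
    nats:
    - name: nat-config
      natIpAllocateOption: AUTO_ONLY  # let Google allocate the NAT IPs
      sourceSubnetworkIpRangesToNat: ALL_SUBNETWORKS_ALL_IP_RANGES
```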

Interestingly, unlike last time, the managed instance group (MIG) is now regional, and we’ll create two instances in the chosen region. Not only does that make load balancing look like actual balancing, it also adds high availability: the MIG tries to spread its instances across the zones of the region, so if one zone fails, we still have surviving instances in another one.

Finally, through namedPorts we told the MIG that its instances expose port 80, hereafter referred to by the name http.
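By default the regional MIG picks the zones itself. If you want to pin them, the v1 regionInstanceGroupManager API has a distributionPolicy field. A sketch, assuming us-east1-b and us-east1-c as the target zones (my pick, not part of the original deployment); everything else is the same MIG as above:

```yaml
- name: http-instance-group-manager
  type: compute.v1.regionInstanceGroupManager
  properties:
    instanceTemplate: $(ref.http-instance-template.selfLink)
    namedPorts:
    - name: http
      port: 80
    targetSize: 2
    region: us-east1
    # Assumed zones: spread the two instances over two specific zones of us-east1
    distributionPolicy:
      zones:
      - zone: zones/us-east1-b
      - zone: zones/us-east1-c
```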

Backend Service

A group of instances makes a service, so we’ll declare it as one. In fact, a backend service can refer to more than one MIG, e.g. one in each region, which gives another level of high availability. But who needs that right now.

```yaml
- name: http-backend-service
  type: compute.v1.backendService
  properties:
    backends:
    - group: $(ref.http-instance-group-manager.instanceGroup)
    healthChecks:
    - $(ref.http-health-check.selfLink)
    loadBalancingScheme: EXTERNAL
    portName: http
    protocol: HTTP
```

The last three lines of the configuration define how we’re going to use this service. Here it’s designed for EXTERNAL load balancing, which affects the available session affinity options and where the backend service and instance groups can be located. We also specify which named port (portName) traffic goes to, and its protocol.
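For the “one MIG per region” idea, the backends list would simply get another entry. A sketch, assuming a second regional MIG named http-instance-group-manager-west (hypothetical, not defined in this post) that is built the same way in another region and also declares the http named port. The sessionAffinity lines show one of the affinity options available to an EXTERNAL HTTP(S) backend service and are there for illustration only:

```yaml
- name: http-backend-service
  type: compute.v1.backendService
  properties:
    backends:
    - group: $(ref.http-instance-group-manager.instanceGroup)
    # Hypothetical second MIG in another region for extra availability
    - group: $(ref.http-instance-group-manager-west.instanceGroup)
    healthChecks:
    - $(ref.http-health-check.selfLink)
    loadBalancingScheme: EXTERNAL
    portName: http
    protocol: HTTP
    # Illustrative only: keep a client on the same backend via a load balancer cookie
    sessionAffinity: GENERATED_COOKIE
    affinityCookieTtlSec: 3600
```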

Because the backend service requires a health check, and Google’s default firewall settings (deny all ingress) would block health check traffic, we have to add both an HTTP health check resource and a firewall rule for it.

```yaml
- name: http-health-check
  type: compute.v1.httpHealthCheck
- name: allow-http-healthcheck
  type: compute.v1.firewall
  properties:
    sourceRanges:
    - 35.191.0.0/16
    - 130.211.0.0/22
    allowed:
    - IPProtocol: tcp
      ports:
      - 80
```
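The health check above relies entirely on defaults. Spelled out, a roughly equivalent resource could look like this. The property names come from the compute v1 httpHealthCheck API, but the values are my assumptions of sensible settings, so check the API reference for the actual defaults before relying on them:

```yaml
- name: http-health-check
  type: compute.v1.httpHealthCheck
  properties:
    port: 80               # must match the port the firewall rule opens
    requestPath: /         # Apache serves the generated index.html here
    checkIntervalSec: 5    # assumed value: how often to probe
    timeoutSec: 5          # assumed value: how long to wait for a reply
    healthyThreshold: 2    # assumed value: successes before "healthy"
    unhealthyThreshold: 2  # assumed value: failures before "unhealthy"
```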

URL Map

Now we’re getting to something new. The URL map behaves like a router between incoming traffic and the actual backend service that’s going to handle it. Because the URL map knows what HTTP is, it can extract the path component of the URL and choose which backend service will handle the request. For instance, /media requests can go to one service, /api requests to another one, etc.

As we have only one backend, we’ll just specify defaultService and route all traffic to it, regardless of the URL.

```yaml
- name: http-url-map
  type: compute.v1.urlMap
  properties:
    defaultService: $(ref.http-backend-service.selfLink)
```
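If we did have separate services for /media and /api, the URL map would grow a host rule and a path matcher. A sketch only: media-backend-service and api-backend-service are hypothetical backend services this post doesn’t create, the matcher name paths is arbitrary, and '*' matches any host:

```yaml
- name: http-url-map
  type: compute.v1.urlMap
  properties:
    # Used when no host rule matches
    defaultService: $(ref.http-backend-service.selfLink)
    hostRules:
    - hosts:
      - '*'
      pathMatcher: paths
    pathMatchers:
    - name: paths
      # Used when a host matches but none of the path rules do
      defaultService: $(ref.http-backend-service.selfLink)
      pathRules:
      - paths:
        - /media
        - /media/*
        service: $(ref.media-backend-service.selfLink)
      - paths:
        - /api
        - /api/*
        service: $(ref.api-backend-service.selfLink)
```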

HTTPS Target Proxy and SSL Certificate

We’re almost there. The targetHttpsProxy resource is the one that terminates HTTPS using the provided sslCertificate. All it needs is two resources: an sslCertificate and a urlMap that takes the traffic from there.

```yaml
- name: elb-target-https-proxy
  type: compute.v1.targetHttpsProxy
  properties:
    sslCertificates:
    - $(ref.ssl-cert.selfLink)
    urlMap: $(ref.http-url-map.selfLink)
```

The sslCertificate resource is just another Deployment Manager resource which holds, you guessed it, an SSL certificate. I simply generated a self-signed certificate and copy-pasted the certificate and its private key into this YAML:

```yaml
- name: ssl-cert
  type: compute.v1.sslCertificate
  properties:
    certificate: |
      -----BEGIN CERTIFICATE-----
      MIIC/zCCAeegAwIBAgIJAKYi4Cy0C/w7MA0GCSqGSIb3DQEBBQUAMBYxFDASBgNV
      BAMMC2V4YW1wbGUuY29tMB4XDTE5MDIwMTIyNDM0MloXDTI5MDEyOTIyNDM0Mlow
      FjEUMBIGA1UEAwwLZXhhbXBsZS5jb20wggEiMA0GCSqGSIb3DQEBAQUAA4IBDwAw
      ggEKAoIBAQCsJlalGt3kyfOkSOQ071Lk1we9j34UBzgbydAk8C3kLzv72uEHMGFG
      O6rdDuCo2/DocD8XZUCQclUWYqPsI34GdmmDMZk+axVi5ivp+BBurWhVJqxFeyTX
      gQiXOL0p9BgvTCgpw2INOIFwX7w+R7or5HYTIcx63Ag7zNR+BueOJyO4baL62jnE
      0nPUEE4gIsrhsNwg4b1sU4u2o3KgmXLU3mn/cqmlUwGsEXeIxqXR924cJ8c+Js/B
      27exelnZXP8WcxUClZ4M67o+m2Ztb/DwPWTBjeNes8jkMxHEZb1FEtdddhCeMMGO
      m6uVy2+myXG2VJT2bGzr2T/O98BG8XVnAgMBAAGjUDBOMB0GA1UdDgQWBBTJ1Ele
      bydjWrqcr8Ex7WyqCgS0YzAfBgNVHSMEGDAWgBTJ1ElebydjWrqcr8Ex7WyqCgS0
      YzAMBgNVHRMEBTADAQH/MA0GCSqGSIb3DQEBBQUAA4IBAQAL1+0cAwaU/OhRCHD0
      w3y+PbZovMn36nr4ZXKYPNylMYeLKEJPMLh1s71Lj3G2yQYQLF/FGPl2PHajpJDa
      vLcI09bF1Olfk7fzB4YfkrDi9bOq01Uii1Ocw3udVxekeHQgOsVqQ4iNHw13Kq4j
      ABgYBspeSKafwA9yNkdqeW3o7fQGnaUU9h/feoW08Cz5RIHbkmd3e5YIWhV8BxT+
      V2aMBQwGQt9QnBRfWv6kNhrrXEGcpQ3uCKpCF2xXiYsncd/imbtPv2+IhVxz0l96
      FEDYJi4Ctj4X6kklqiFlTEpFOuhKHCj5xvnLk9NWaqLo+oARfIO++gy6zKRGzTLs
      t6s2
      -----END CERTIFICATE-----
    privateKey: |
      -----BEGIN RSA PRIVATE KEY-----
      MIIEpQIBAAKCAQEArCZWpRrd5MnzpEjkNO9S5NcHvY9+FAc4G8nQJPAt5C87+9rh
      BzBhRjuq3Q7gqNvw6HA/F2VAkHJVFmKj7CN+BnZpgzGZPmsVYuYr6fgQbq1oVSas
      RXsk14EIlzi9KfQYL0woKcNiDTiBcF+8Pke6K+R2EyHMetwIO8zUfgbnjicjuG2i
      +to5xNJz1BBOICLK4bDcIOG9bFOLtqNyoJly1N5p/3KppVMBrBF3iMal0fduHCfH
      PibPwdu3sXpZ2Vz/FnMVApWeDOu6PptmbW/w8D1kwY3jXrPI5DMRxGW9RRLXXXYQ
      njDBjpurlctvpslxtlSU9mxs69k/zvfARvF1ZwIDAQABAoIBAQCNdMNlz/ndcgT+
      TdcXmEBpQjheD3buRjBYxTB/6cwL4LRNc8HNAngsGgOAuiTpHDGNDg8Jzm2LRCee
      yVchRtjbvplc8HiXza45IiGbk/cMuvksXybXwSS44JKKkFkADE+DLfUivCXp7zCN
      gl1QX+gfAQ/1EKTRn9Q0L0+8bzf+mdjMVyz57Y9HLP5RzNde34JCQRc50ZrG4vXs
      ddng915j2jnQMFjFGd/8GdkgojSzMZv0PEglHB5PKudUnKoYZVNRksVJhyCbb3A+
      23KiV6PA0QdiE7Xl2dX3iQf3Z/7+HtCUT9gONu+PNZoZ05vwCui5j/8nwPpZtyL2
      drdCa15hAoGBAOI3y0PScaV6JrgrDopoKqacC45vE8MHSi0WcM/jHLWF0Mn1+uJD
      pWKD7LZCesEZkg+pd7scl94JMdww+H+gqIgjCQzQh1X5VhgblqPc011hJoKNAt4x
      U9rNQvS9Fhoh2Ik5KUMkO7OhjHbSqEkkX+jHobtnOd2hjVmK7mpXi9RbAoGBAMLQ
      Sd6I3CWtbxFd65s8pJCmTW2DD8R3MA+8JqoCcvMk6bKfS+MxmHPoOaWOzdrrvqYW
      ihxJi7vCi6sq/xeFZT/LF6BG1lkaEYSiLINyAWwjddgOMaZD/CPIGy9WhzAa93RU
      Bp3B/bxhEJtjrGlxtBbgszoLBeb+dRBbh/fJPYDlAoGBAL5+A0GqbZ7N/NrrDwSH
      8Rp5ntWjPb3mXpUXJ4o3kk5dT9MxusFb+2G4+9UCqEIBGVjs+PDshAoqLf1gk3FN
      xX1WG2HaG4zPOKt2V+TGqIoiq/4VZkvat+UxIefbbkg1JhVvuApc8ZUzPYg1nhZx
      df4cVVns8/Jo/xFfB6Mu84WvAoGBAKLn8E2Rnp43KICaTFH05Rw8pNSl20KL9HnD
      +YUDJUKjpHUE9j2XFIggMkx6XTPrHPLgOD+tVJb++TJ6cvQlTWSKHUie09GQlgOW
      ZajJZd0azgmM3QHPKgJ17B2qusOEWVdCiIHVXavwcyWttNg8B791yQoJe7cNI7E5
      CTswYijtAoGAVioH74Yoz/XOpNStblNq1/6DtiHlemvZWp/PhnVmg0djo/6TJMx7
      xuMSPimvIRZplQInoZmQeg1XX/9a0wJvBQ8CadnAPQ9hi4KsCeFvRK0tapPb+AXH
      4lTE2F20zg/gl6PGRYckZbyA778QyKM2TgM8MBoZvqPJFclqyABSpYI=
      -----END RSA PRIVATE KEY-----
```

Global forwarding rule and external IP address

Finally, the entry point to all this beauty: a global forwarding rule and an external IP address for it, either predefined or auto-assigned.

```yaml
- name: elb-https-forwarding-rule
  type: compute.v1.globalForwardingRule
  properties:
    IPAddress: $(ref.elb-static-ip.selfLink)
    IPProtocol: TCP
    loadBalancingScheme: EXTERNAL
    portRange: 443
    target: $(ref.elb-target-https-proxy.selfLink)
```

It takes TCP traffic on port 443 from the outside world and passes it to the targetHttpsProxy and further down, which turns the resources we’ve created so far into a load balancer.

An external static IP address is a tiny two-liner with no particular magic in it. I’m not even choosing which IP address to use: whatever Google picks is fine, I just want it to have a name.

```yaml
- name: elb-static-ip
  type: compute.v1.globalAddress
```

And finally…

After putting all the building blocks into elb_https.yaml, we can deploy the resources and wait. The deployment command completes pretty fast, but it might take five minutes or more until our external load balancer responds with HTTP 200.

```shell
gcloud deployment-manager deployments create test-elb --config elb_https.yaml
# ..........
#NAME                         TYPE                                   STATE      ERRORS  INTENT
#allow-http-healthcheck       compute.v1.firewall                    COMPLETED  []
#elb-https-forwarding-rule    compute.v1.globalForwardingRule        COMPLETED  []
#elb-static-ip                compute.v1.globalAddress               COMPLETED  []
#elb-target-https-proxy       compute.v1.targetHttpsProxy            COMPLETED  []
#http-backend-service         compute.v1.backendService              COMPLETED  []
#http-health-check            compute.v1.httpHealthCheck             COMPLETED  []
#http-instance-group-manager  compute.v1.regionInstanceGroupManager  COMPLETED  []
#http-instance-template       compute.v1.instanceTemplate            COMPLETED  []
#http-url-map                 compute.v1.urlMap                      COMPLETED  []
#ssl-cert                     compute.v1.sslCertificate              COMPLETED  []
```

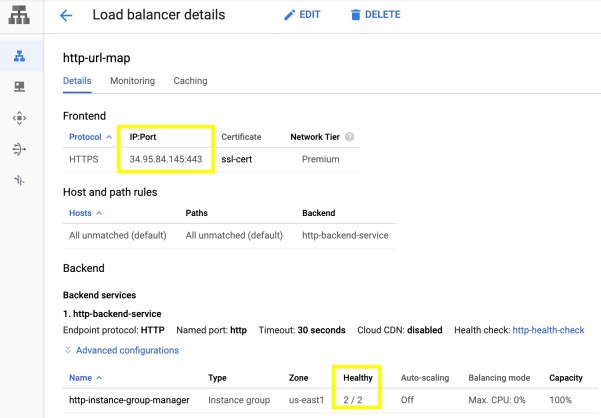

Eventually it happens. Health checks turn green:

The MIG recognizes the backing instances as healthy:

It all starts to work:

Conclusion

I was going to say something like “You see, that was easy”, but who am I kidding? It wasn’t. Still, the external load balancer’s building blocks, their order and their purpose kinda make sense, and that’s good enough. And I’m definitely keeping this demo ELB configuration as a template in case I need to create one with Deployment Manager in the future.

Thank you so much, this helped me a lot. I would like to ask a follow-up question.

Let’s say we want to use Google-managed certificates and automatically associate the static IP with a subdomain that is also managed by Google. Is it possible to achieve this in a clean way, or could you point me to the right resource?

It’s been a while since I touched Deployment Manager, and its type support constantly changes, but it should be possible to achieve what you want without many hacks. I’ve checked the output of gcloud deployment-manager types list, and there are no types for domains or DNS record sets. However, Google does have APIs for those, and you can register them via type providers (https://codeblog.dotsandbrackets.com/type-providers/) and use the newly imported types like any other type: instance, sslCertificate, etc. I quickly found the DNS API reference, https://cloud.google.com/dns/docs/reference/v1, which is the entry point to the API descriptor URL that a type provider needs, as well as a reference of all existing types and their parameters.

Basically, you’d come up with the list of API calls you need to execute in order to create the required resources, and then turn that list of calls into a declarative list of DM resources, importing missing resource types as type providers along the way. Unfortunately, I can’t be more specific without actually trying to implement this myself, so this is the best I can do for now.
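To make that a bit more concrete, registering the Cloud DNS API as a type provider might look roughly like this. It follows the general type provider pattern for Google APIs (the API’s discovery document as descriptorUrl, plus an input mapping that forwards Deployment Manager’s OAuth token); the provider name dns-provider is hypothetical, and I haven’t deployed this exact snippet:

```yaml
# Sketch: import Cloud DNS v1 API types into Deployment Manager
- name: dns-provider
  type: deploymentmanager.v2beta.typeProvider
  properties:
    # Discovery document describing the Cloud DNS v1 API
    descriptorUrl: https://www.googleapis.com/discovery/v1/apis/dns/v1/rest
    options:
      inputMappings:
      # Pass Deployment Manager's own access token to the DNS API
      - fieldName: Authorization
        location: HEADER
        value: >
          $.concat("Bearer ", $.googleOauth2AccessToken())
```

Resources of the imported types would then be referenced through the provider name, as described in the type providers post linked above.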